The somewhat unlikely partnership between Microsoft and Red Hat is behind the cool technology KEDA, which allows an event-driven and serverless-ish approach to running things like Azure Functions in Kubernetes.

Would it not be cool if we could run Azure Functions in a Kubernetes cluster and still get scaling similar to the managed Azure Functions service? KEDA addresses this and will automatically scale/spin up pods based on an Azure Function trigger, and of course remove them again when they are no longer needed.

This does not work with all Azure Function triggers, but the queue ones are supported (RabbitMQ, Azure Service Bus/Storage Queues and Apache Kafka).

Let’s try it!

As with all new technologies in the microservice space, setting up a test rig is easy! In my test I will use:

- Kubernetes cluster in Azure AKS

- Apache Kafka cluster

- KEDA (Kubernetes-based Event Driven Autoscaling)

- Azure Function (running in Docker)

1 – Deploy an Apache Kafka cluster using Helm.

helm repo add bitnami https://charts.bitnami.com/bitnami

helm repo update

kubectl create namespace kafka

helm install kafka -n kafka --set kafkaPassword=somesecretpassword,kafkaDatabase=kafka-database --set volumePermissions.enabled=true --set zookeeper.volumePermissions.enabled=true bitnami/kafka

2 – Create a Kafka Topic

kubectl --namespace kafka exec -it kafka-0 -- kafka-topics.sh --create --zookeeper kafka-zookeeper:2181 --replication-factor 1 --partitions 1 --topic important-stuff

3 – Deploy KEDA to the Azure AKS cluster

The Azure Functions CLI tool has functionality to deploy KEDA to your cluster, but here I will use Helm. The end result is the same.

helm repo add kedacore https://kedacore.github.io/charts

helm repo update

kubectl create namespace keda

helm install keda kedacore/keda --namespace keda

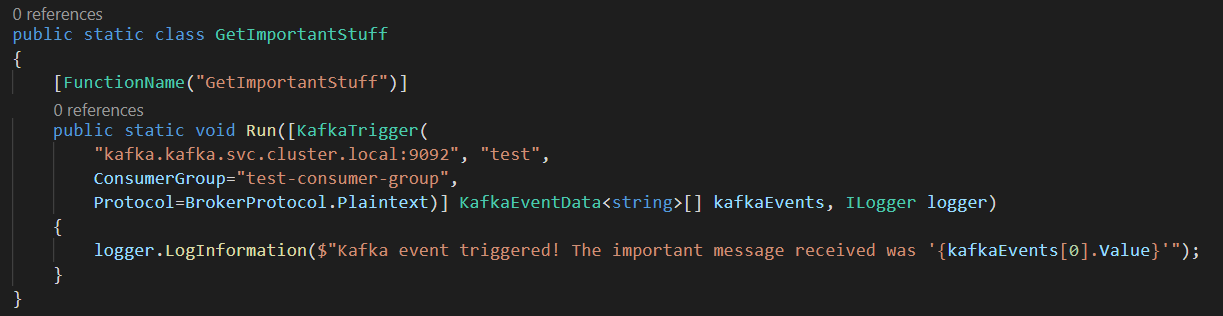

4 – Create an Azure Function

func init KedaTest

#Add the Kafka extension as this will be our trigger

dotnet add package Microsoft.Azure.WebJobs.Extensions.Kafka --version 1.0.2-alpha

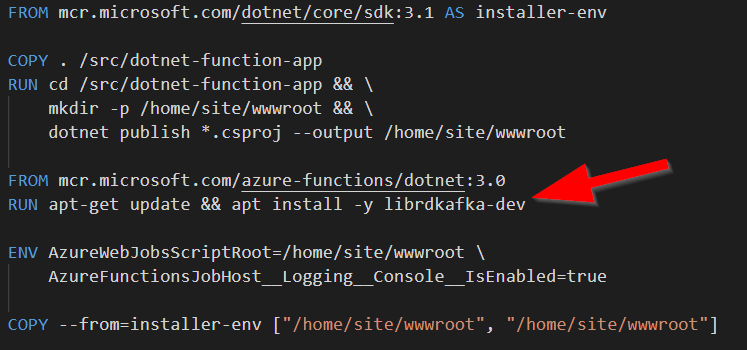

5 – Add Dockerfile to function app

func init --docker-only

The generated Dockerfile will not have the prerequisite required by the Kafka extension on Linux, so we need to modify the Dockerfile to install the librdkafka dependency.

RUN apt-get update && apt-get install -y librdkafka-dev

I also updated the .NET Core SDK Docker image to version 3.1.

6 – Deploy the Azure function

The functions CLI tool has built-in functionality to create the necessary Kubernetes manifests as well as to apply them. To generate them without applying them, use the "--dry-run" parameter.

func kubernetes deploy --name dostuff --registry magohl --namespace kafka

Sweet – we have our function deployed. But is it running?

A kubectl get pods shows no pods running. Let's wait for KEDA to do some auto-scaling for us!
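No pods are running yet because, as far as I can tell, the function's deployment is governed by a KEDA ScaledObject that is allowed to scale all the way down to zero. Below is a hand-written sketch of what such a resource could look like. It is not the actual output of the functions CLI. The KEDA 2.x schema, the broker address, the consumer group name, the replica limits and the lagThreshold value are all assumptions made for illustration. The script only writes the YAML to a file so it can be read; it does not apply anything to the cluster.

```shell
#!/bin/sh
# Hand-written sketch of a KEDA ScaledObject for the function deployment.
# Assumptions (not taken from the generated manifests): KEDA 2.x schema,
# broker address, consumer group name, replica limits and lagThreshold.
cat > scaledobject-sketch.yaml <<'EOF'
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: dostuff
  namespace: kafka
spec:
  scaleTargetRef:
    name: dostuff            # the Deployment created for the function
  minReplicaCount: 0         # allow scaling to zero when the topic is idle
  maxReplicaCount: 5         # assumed upper limit
  cooldownPeriod: 300        # seconds of inactivity before scaling to zero
  triggers:
    - type: kafka
      metadata:
        bootstrapServers: kafka.kafka.svc.cluster.local:9092   # assumed address
        consumerGroup: dostuff-group                           # assumed name
        topic: important-stuff
        lagThreshold: "10"                                     # assumed value
EOF

# Print the file for review; nothing is applied to the cluster here.
cat scaledobject-sketch.yaml
```

To compare this with what is really deployed, kubectl get scaledobject --namespace kafka -o yaml should show the real thing.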

7 – Test KEDA!

We will watch pods being created in one window, send some test messages in another and look at the logs in a third.
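The three windows could be driven by something like the helper functions below, one per window. This is a sketch rather than the exact commands I ran. The namespace, broker pod name and topic come from the earlier steps, but the app=dostuff label selector and the console producer flags are assumptions. Newer Kafka versions use --bootstrap-server instead of --broker-list, and a password-protected broker may need extra client configuration.

```shell
#!/bin/sh
# One helper per terminal window. Namespace, pod name and topic come from
# the earlier steps; the app=dostuff label and producer flags are assumptions.

# Window 1: watch pods appear and disappear as KEDA scales the deployment.
watch_pods() {
  kubectl get pods --namespace kafka --watch
}

# Window 2: publish N test messages (default 10) to the topic.
send_messages() {
  count="${1:-10}"
  i=1
  while [ "$i" -le "$count" ]; do
    echo "test message $i"
    i=$((i + 1))
  done |
    kubectl --namespace kafka exec -i kafka-0 -- \
      kafka-console-producer.sh --broker-list localhost:9092 --topic important-stuff
}

# Window 3: follow the logs of the function pods.
tail_logs() {
  kubectl logs --namespace kafka --selector app=dostuff --follow
}
```

Source the file in each window, then run watch_pods in the first, send_messages 20 in the second and tail_logs in the third once a pod has started.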

Cool! KEDA does watch the Kafka topic and will schedule pods when messages appear. After the default cooldown of 5 minutes without new messages, the pods are terminated again.
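To get a feel for how many pods to expect, here is a simplified model of the scaling decision. It assumes the replica count is roughly the consumer lag divided by a lag threshold, rounded up, and limited by both the number of topic partitions and a maximum replica count. The function name and the numbers are made up for illustration, and the real behaviour also depends on polling intervals and the cooldown period.

```shell
#!/bin/sh
# Simplified model of the replica count for a Kafka-triggered workload.
# Assumption: replicas = ceil(lag / threshold), limited by the partition
# count and a maximum; zero lag means zero pods once the cooldown has passed.
desired_replicas() {
  lag="$1"; threshold="$2"; partitions="$3"; max_replicas="$4"
  if [ "$lag" -le 0 ]; then
    echo 0
    return
  fi
  replicas=$(( (lag + threshold - 1) / threshold ))   # integer ceiling
  if [ "$replicas" -gt "$partitions" ]; then
    replicas="$partitions"
  fi
  if [ "$replicas" -gt "$max_replicas" ]; then
    replicas="$max_replicas"
  fi
  echo "$replicas"
}

desired_replicas 25 10 1 5    # one partition, like the topic created in step 2: prints 1
desired_replicas 25 10 4 5    # four partitions: prints 3
desired_replicas 0 10 4 5     # idle topic: prints 0
```

With the single-partition topic from step 2, this model never goes beyond one pod, so a topic with more partitions would be needed to see real scale-out.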